Understanding Transformer Architectures

The Transformer architecture has come to dominate natural language processing and machine learning. Since its introduction in the seminal paper “Attention Is All You Need” by Vaswani et al. (2017), the Transformer has become the backbone of many state-of-the-art models such as BERT, GPT, and T5. Its ability to model long-range dependencies efficiently and its inherent parallelism made it a breakthrough in sequence-to-sequence modelling. This blog discusses the basic building blocks of the Transformer architecture, the concepts behind them, and their applications.

The Problem with Traditional Architectures

Before Transformers, the standard models for sequential data such as text were RNNs (Recurrent Neural Networks) and LSTM (Long Short-Term Memory) networks. These models had several deficiencies:

- Sequential Dependency: Processing in an RNN is sequential, preventing parallelism and significantly increasing training time.

- Vanishing Gradient Problem: Over long sequences, gradients tend to either vanish or explode, which makes dependencies that span many time steps difficult to learn.

- Limited Context: Although gating in LSTMs and bidirectional variants improved the situation somewhat, traditional models still struggled with long-term dependencies.

Transformers overcome these issues with a mechanism called self-attention, which lets every position attend directly to every other position. This makes long sequences easier to handle and allows all positions to be processed in parallel.

The Transformer Architecture: A Bird's Eye View

The Transformer uses an encoder-decoder architecture. Both the encoder and the decoder are stacks of layers, and each layer is built around two primary components:

- Multi-Head Self-Attention Mechanism

- Position-Wise Feed-Forward Neural Network

Encoder and Decoder Architecture

- Encoder: A stack of identical layers, each consisting of two main sub-layers:

  - Multi-Head Self-Attention

  - Feed-Forward Network

  Each sub-layer has a residual connection around it, followed by layer normalization. The input sequence passes through these layers, which build rich, contextualized representations of it.

- Decoder: The decoder resembles the encoder, with two differences: its self-attention is masked, and it has an additional sub-layer that attends over the encoder output. The mask stops each position from attending to later positions, so no information leaks from future tokens when the model is trained for autoregressive tasks. Every decoder layer consists of:

  - Masked Multi-Head Self-Attention

  - Encoder-Decoder Attention

  - Feed-Forward Network
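To make the masking idea concrete, here is a minimal NumPy sketch of a causal mask applied before the softmax. The function names `causal_mask` and `masked_softmax` are made up for this illustration and are not taken from any particular library.

```python
import numpy as np

def causal_mask(seq_len):
    """Boolean mask: entry (i, j) is True when position i may attend to position j (j <= i)."""
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def masked_softmax(scores, mask):
    """Row-wise softmax after setting disallowed positions to -inf."""
    scores = np.where(mask, scores, -np.inf)
    # Subtract the row maximum for numerical stability; the diagonal is always allowed,
    # so every row has at least one finite entry.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)

# Toy example: uniform scores for a 4-token sequence.
scores = np.zeros((4, 4))
weights = masked_softmax(scores, causal_mask(4))
print(weights)
```

With uniform scores, each row spreads its weight evenly over the current and earlier positions only, and every entry above the diagonal is zero.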

Key Concepts in Transformer Models

1. Self-Attention Mechanism

Self-attention lets the Transformer weigh the relevance of each word in the input sequence relative to every other word. This way, it can capture relationships between words without processing them one after another. The mechanism works as follows:

Three vectors are computed for every word in the sequence:

- Query (Q): What to look for.

- Key (K): How to match against queries.

- Value (V): The context to use.

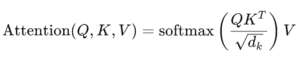

The attention scores are computed by taking the dot product of each Query with every Key and scaling the result by the square root of the key dimension. A softmax then turns the scores into weights, and the output for each word is the weighted sum of the Value vectors. These weights express the relative importance of each word in the sequence with respect to the others, so the model pays closer attention to the relevant parts of the text.
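The computation described above can be sketched in a few lines of NumPy. This is an illustrative single-head version, not a reference implementation; the projection matrices `W_q`, `W_k`, `W_v` and the toy sizes are assumptions chosen only for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Return softmax(Q K^T / sqrt(d_k)) V together with the attention weights."""
    d_k = K.shape[-1]
    scores = Q @ K.swapaxes(-1, -2) / np.sqrt(d_k)  # (seq_len, seq_len)
    weights = softmax(scores, axis=-1)              # each row sums to 1
    return weights @ V, weights

# Toy input: 5 tokens with model dimension 8, projected to dimension 4.
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 8))
W_q, W_k, W_v = (rng.normal(size=(8, 4)) for _ in range(3))  # hypothetical projections
output, weights = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(output.shape, weights.shape)
```

Row `i` of `weights` shows how strongly token `i` attends to every token in the sequence, including itself.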

2. Multi-Head Attention

Instead of a single attention head, the Transformer uses multi-head attention to capture different relationships in different subspaces of the input. Each head applies its own learned projections and can focus on different parts of the sequence. The outputs of all heads are concatenated and passed through a final linear transformation.
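A rough NumPy sketch of this split, attend, concatenate, project pattern is shown below. It assumes the model dimension is divisible by the number of heads and omits masking, dropout, and biases; the weight names (`W_q`, `W_k`, `W_v`, `W_o`) are placeholders for learned parameters.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    """Self-attention over X of shape (seq_len, d_model) with num_heads heads."""
    seq_len, d_model = X.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(M):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return M.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (num_heads, seq_len, seq_len)
    heads = softmax(scores, axis=-1) @ V                 # (num_heads, seq_len, d_head)
    # Concatenate the heads back to (seq_len, d_model), then apply the output projection.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ W_o

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 16))  # 6 tokens, d_model = 16
W_q, W_k, W_v, W_o = (rng.normal(size=(16, 16)) * 0.1 for _ in range(4))
out = multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads=4)
print(out.shape)
```

Each head works on a 4-dimensional slice here, so the total cost is similar to one full-width head while allowing four different attention patterns.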

3. Positional Encoding

Unlike an RNN, the Transformer has no built-in notion of sequence order, so information about word order must be supplied explicitly. Positional encodings, computed with sine and cosine functions of different frequencies, are added to the input embeddings to inject the position of each word.
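As an illustration, the sinusoidal encoding from the original paper, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)), can be sketched as follows. The function name and the assumption that `d_model` is even are choices made for this example.

```python
import numpy as np

def sinusoidal_positional_encoding(max_len, d_model):
    """Return a (max_len, d_model) matrix of sinusoidal encodings (d_model assumed even)."""
    positions = np.arange(max_len)[:, None]   # (max_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]  # the even indices 2i
    angles = positions / np.power(10000.0, dims / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions
    pe[:, 1::2] = np.cos(angles)  # odd dimensions
    return pe

pe = sinusoidal_positional_encoding(max_len=50, d_model=16)
# The encodings are simply added to the token embeddings (random stand-ins here).
embeddings = np.random.default_rng(0).normal(size=(50, 16))
inputs = embeddings + pe
print(pe.shape)
```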

4. Feed-Forward Networks

After attention, the representations are passed to a position-wise feed-forward network consisting of two linear transformations with a ReLU activation in between. The same network is applied to each position independently, which adds non-linear capacity to the model on top of the attention step.
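A minimal sketch of such a network in NumPy is given below. The dimensions and parameter names are illustrative assumptions; the last line checks that applying the network to one position alone gives the same result as applying it to the whole sequence.

```python
import numpy as np

def feed_forward(X, W1, b1, W2, b2):
    """Position-wise FFN: max(0, X W1 + b1) W2 + b2, applied to every row of X."""
    hidden = np.maximum(0.0, X @ W1 + b1)  # ReLU between the two linear maps
    return hidden @ W2 + b2

rng = np.random.default_rng(0)
d_model, d_ff = 8, 32  # hypothetical sizes; the inner layer is usually wider
X = rng.normal(size=(5, d_model))
W1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
W2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

out = feed_forward(X, W1, b1, W2, b2)
# Position-wise: processing token 2 on its own matches row 2 of the full output.
print(np.allclose(feed_forward(X[2:3], W1, b1, W2, b2), out[2:3]))
```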

5. Residual Connections and Layer Normalization

Each sub-layer is wrapped in a residual connection followed by layer normalization, which stabilizes training and accelerates convergence. This makes it practical to stack many layers and learn deeper representations.
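The pattern is often written as LayerNorm(x + Sublayer(x)). Here is a small NumPy sketch of it, with a stand-in sub-layer; `gamma` and `beta` are the learnable scale and shift of layer normalization, and the helper names are invented for this example.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize each row over the feature dimension, then scale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def add_and_norm(x, sublayer, gamma, beta):
    """Residual connection around a sub-layer, followed by layer normalization."""
    return layer_norm(x + sublayer(x), gamma, beta)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
gamma, beta = np.ones(8), np.zeros(8)

# Stand-in sub-layer; in a real model this would be attention or the feed-forward network.
normed = add_and_norm(x, lambda h: 0.5 * h, gamma, beta)
print(normed.mean(axis=-1), normed.std(axis=-1))
```

With `gamma` set to ones and `beta` to zeros, each output row has mean close to 0 and standard deviation close to 1.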

Training and Optimization

The training objective depends on the task at hand. Some typical examples are:

- Machine Translation: Minimize a cross-entropy loss between the predicted and actual sequences.

- Language Modelling: Predict the next word given the preceding words.

Techniques like label smoothing and dropout regularization help generalization, while learning rate warm-up helps stabilize the early stages of training.
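As one example of learning rate warm-up, the schedule described in the original Transformer paper increases the rate linearly for a number of warm-up steps and then decays it with the inverse square root of the step number. The sketch below uses that paper's defaults (`d_model=512`, `warmup_steps=4000`) purely for illustration; other schedules are also common, and the function name is made up.

```python
def warmup_inverse_sqrt_lr(step, d_model=512, warmup_steps=4000):
    """lr = d_model^-0.5 * min(step^-0.5, step * warmup_steps^-1.5)."""
    step = max(step, 1)  # avoid division by zero at step 0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

for step in (1, 1000, 4000, 16000):
    print(step, warmup_inverse_sqrt_lr(step))
```

The rate peaks at the end of warm-up (step 4000 here) and falls off afterwards.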

Variants and Extensions of Transformer

Over the years, numerous variants of the Transformer architecture have been proposed to improve its efficiency and broaden its applicability:

- BERT (Bidirectional Encoder Representations from Transformers): Uses only the encoder stack and is pre-trained as a language model.

- GPT (Generative Pre-trained Transformer): Uses only the decoder stack to generate coherent text.

- T5 (Text-to-Text Transfer Transformer): Frames every NLP problem as a text-to-text task, unifying the training paradigms in one framework.

- ALBERT, RoBERTa, DistilBERT: Optimized versions of BERT with different architectural or training changes.

Applications of Transformer Models

Transformers have found applications in many domains:

- Natural Language Processing: Machine translation, text summarization, question answering, and sentiment analysis.

- Vision: Vision Transformers (ViT) apply essentially the same architecture to image patches, achieving strong performance on image classification tasks.

- Speech Processing: Transformers are used in ASR (Automatic Speech Recognition) and TTS (Text-to-Speech) systems.

Future of Transformer Architectures

With Transformer research growing rapidly, some likely future trends are:

- Lower Computational Cost and Memory Usage: Research is focused on reducing the computational cost and memory usage of attention, for example through models such as Longformer and Performer.

- Multimodal Transformers: Unified models that take text, vision, and speech as input and learn from many data types at once.

- Transfer Learning: Large pre-trained Transformer models, such as GPT-4, have become the basis for few-shot and zero-shot learning.

Conclusion

The Transformer architecture has revolutionized NLP and deep learning, providing a robust framework for handling sequential data and pushing the boundaries of what machine learning models can achieve. By introducing mechanisms like self-attention and positional encoding, Transformers overcame the limitations of traditional RNNs and LSTMs and became indispensable for a variety of tasks. With continued advancements and adaptations such as BERT, GPT, and T5, Transformers are likely to remain at the forefront of AI research, driving innovation across natural language, vision, and multimodal domains.

By – Kumar Kanishk